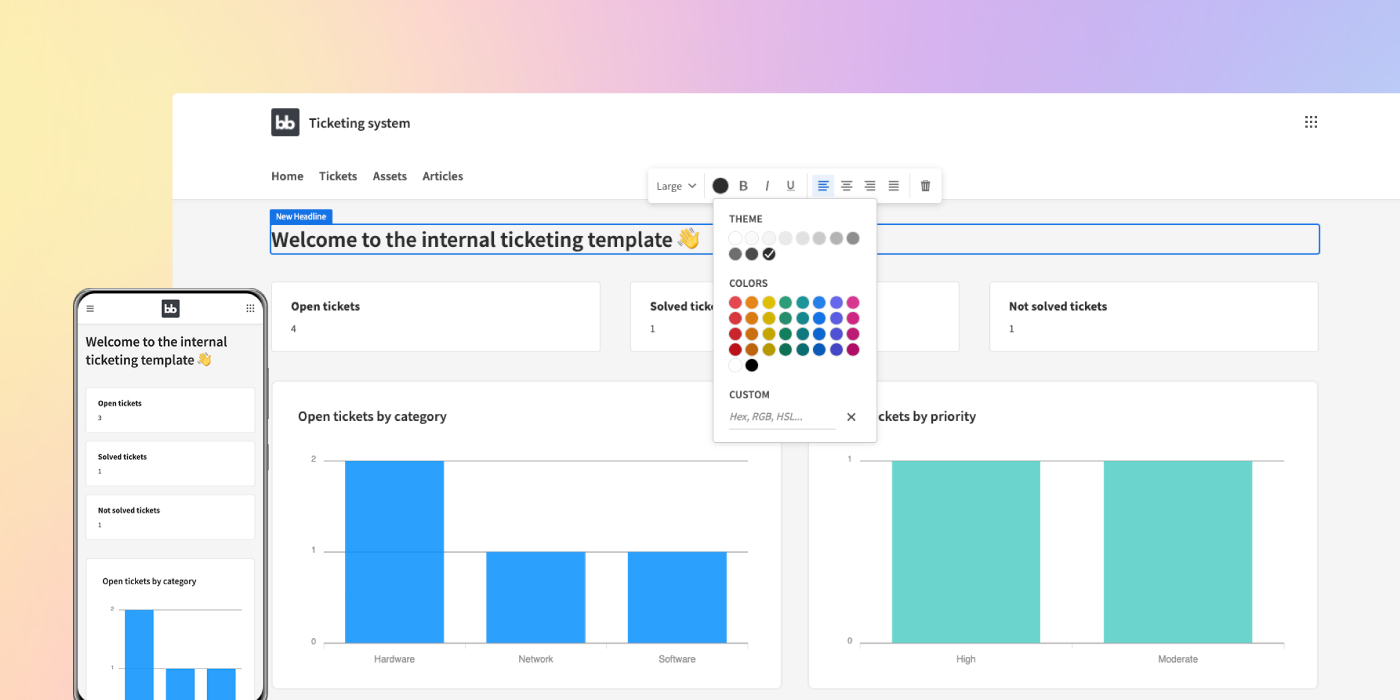

Budibase is an open-source low-code platform that lets developers and IT professionals build and automate custom applications in minutes.

Martin McKeaveney 💻 📖 ⚠️ 🚇

Michael Drury 📖 💻 ⚠️ 🚇

Andrew Kingston 📖 💻 ⚠️ 🎨

Michael Shanks 📖 💻 ⚠️

Kevin Åberg Kultalahti 📖 💻 ⚠️

Joe 📖 💻 🖋 🎨

Rory Powell 💻 📖 ⚠️

Peter Clement 💻 📖 ⚠️

Conor_Mack 💻 ⚠️

pngwn 💻 ⚠️

HugoLd 💻

victoriasloan 💻

yashank09 💻

SOVLOOKUP 💻

seoulaja 🌍

Maurits Lourens ⚠️ 💻