diff --git a/i18n/README.kr.md b/i18n/README.kr.md

new file mode 100644

index 0000000000..09fc83569b

--- /dev/null

+++ b/i18n/README.kr.md

@@ -0,0 +1,221 @@

+

+

+  +

+

+

+

+

+ Budibase

+

+

+ Build custom business tools in minutes on your own infrastructure.

+

+

+ Budibase is an open-source low-code platform that lets developers and IT professionals build and automate custom applications in minutes.

+

+

+

+ 🤖 🎨 🚀

+

+

+

+  +

+

+

+

+

+  +

+

+

+

+

+  +

+

+

+

+

+  +

+

+

+  +

+

+

+  +

+

+

+

+

+

+ Introduction

+ ·

+ Docs

+ ·

+ Feature request

+ ·

+ Report a bug

+ ·

+ Support: Discussions

+

+

+

+## ✨ Features

+

+### Build "real" software

+Budibase lets you build high-performance single-page applications. You can also make them responsive, giving your users a great experience.

+

+

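+### Open source and extensible

+Budibase is open source and released under the GPL v3 license. This should give you peace of mind that Budibase will always be around. And because we offer a developer-friendly environment, you can fork and modify the source code as much as you like, or contribute directly to Budibase.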

+

+

+### Load existing data or start from scratch

+Budibase can pull in data from multiple sources, including MongoDB, CouchDB, PostgreSQL, MySQL, Airtable, S3, DynamoDB, or a REST API.

+

+Alternatively, you can start from scratch with Budibase and build your own applications without any external tools. [Suggest a data source](https://github.com/Budibase/budibase/discussions?discussions_q=category%3AIdeas).

+

+

+  +

+

+

+

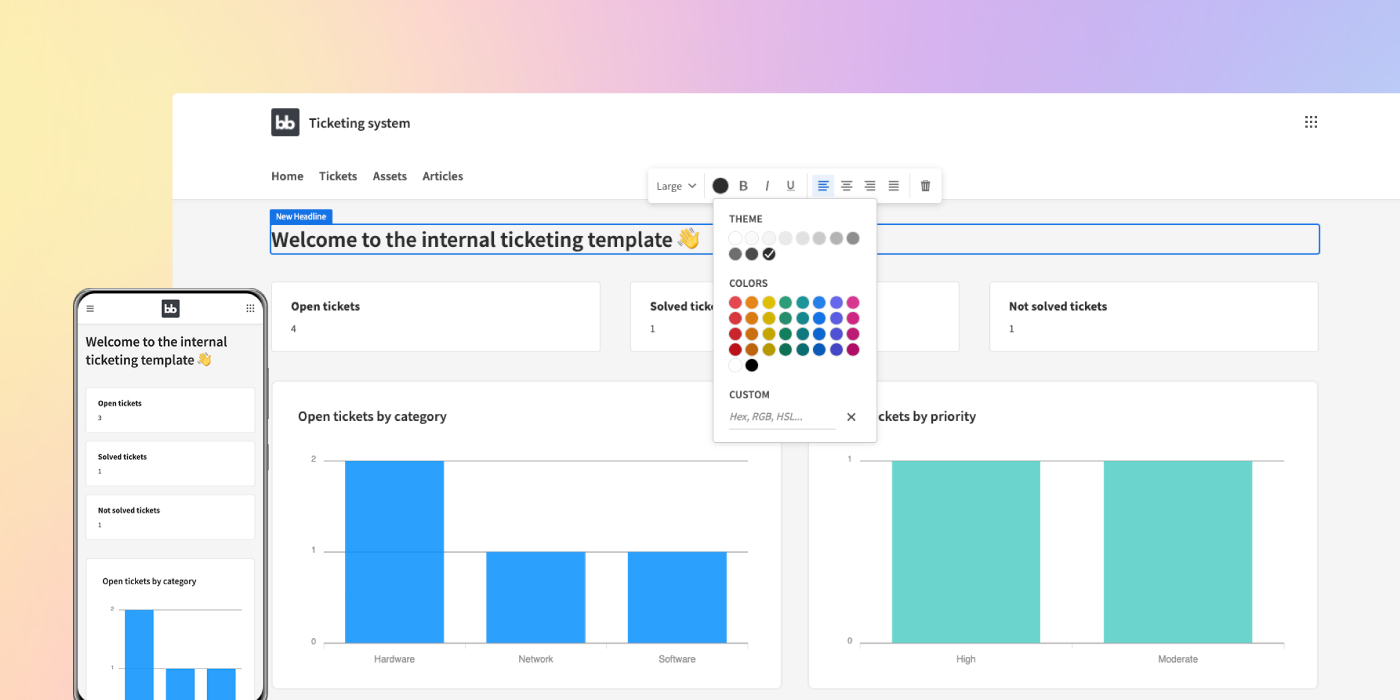

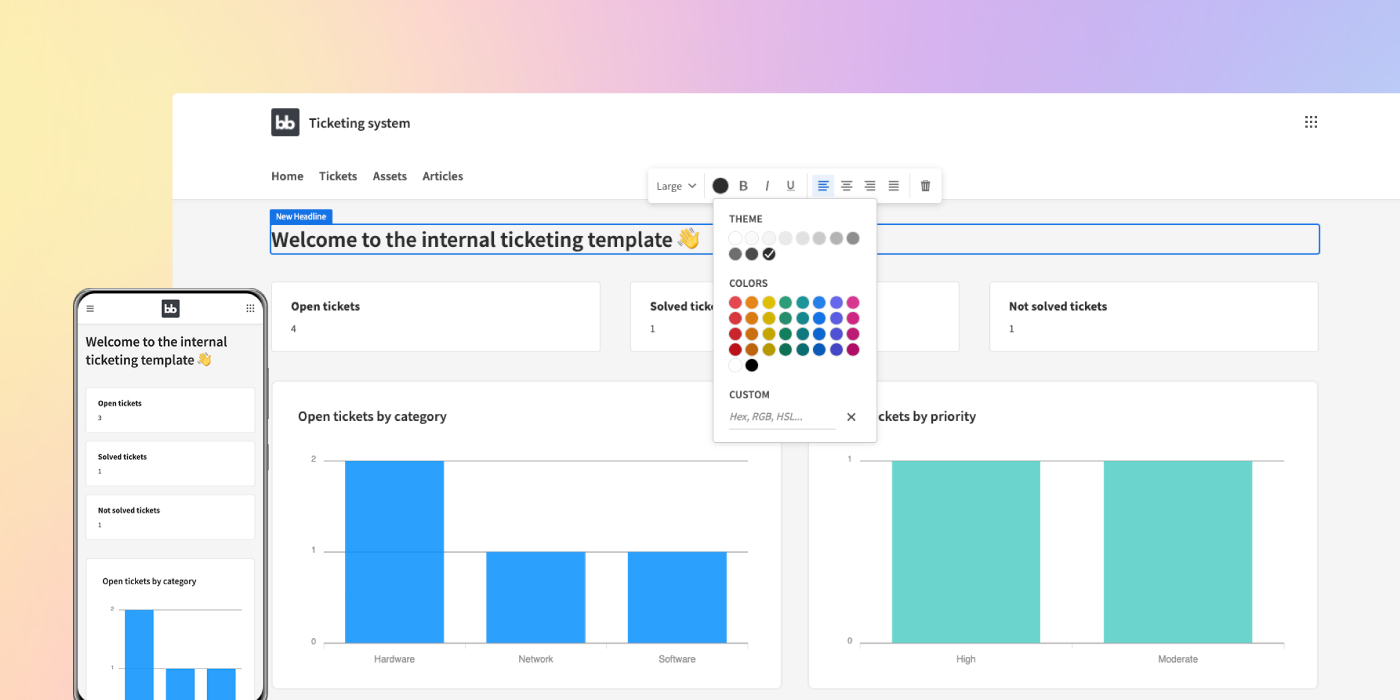

+### Design and build applications with powerful built-in components

+

+Budibase comes with beautifully designed, powerful components that make it easy to build your UI. It also exposes plenty of CSS styling options for more creative control.

+ [Request new component](https://github.com/Budibase/budibase/discussions?discussions_q=category%3AIdeas).

+

+

+  +

+

+

+

+### Automate processes, integrate with other tools, and connect to webhooks

+Save time by automating workflows and manual processes. From connecting webhook events to automating emails, simply tell Budibase what to do and it handles the rest. [Create a new automation](https://github.com/Budibase/automations) or [request a new automation](https://github.com/Budibase/budibase/discussions?discussions_q=category%3AIdeas).

+

+

+  +

+

+

+

+### Your favorite tools

+Budibase integrates with a range of tools so you can build applications the way you prefer.

+

+

+  +

+

+

+

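+### Admin paradise

+Budibase scales to projects of any size. You can self-host it on your own or your organization's servers and manage users, onboarding, SMTP, apps, groups, theming, and more in one place. You can also give users or groups an app portal and delegate user management to group managers.

+- Promo video: https://youtu.be/xoljVpty_Kw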

+

+

+

+## 🏁 Get started

+

+Host Budibase on your own infrastructure with Docker, Kubernetes, or Digital Ocean, or use Budibase in the cloud if you want to build applications quickly without the worry.

+

+### [Get started with self-hosting Budibase](https://docs.budibase.com/docs/hosting-methods)

+

+- [Docker - single ARM compatible image](https://docs.budibase.com/docs/docker)

+- [Docker Compose](https://docs.budibase.com/docs/docker-compose)

+- [Kubernetes](https://docs.budibase.com/docs/kubernetes-k8s)

+- [Digital Ocean](https://docs.budibase.com/docs/digitalocean)

+- [Portainer](https://docs.budibase.com/docs/portainer)

+

+

+### [Get started with Budibase in the cloud](https://budibase.com)

+

+

+

+## 🎓 Learning Budibase

+

+Documentation: [Budibase docs](https://docs.budibase.com/docs).

+

+

+

+

+

+## 💬 Community

+

+You are invited to join the Budibase community, where you can ask questions, help others, and have friendly conversations with other Budibase users.

+[GitHub Discussions](https://github.com/Budibase/budibase/discussions)

+

+

+

+## ❗ Code of conduct

+

+Budibase is dedicated to providing a welcoming, mutually respectful environment for people from all walks of life. We expect the same from our community.

+[**Code of conduct**](https://github.com/Budibase/budibase/blob/HEAD/.github/CODE_OF_CONDUCT.md).

+

+

+

+

+

+## 🙌 Contributing to Budibase

+

+From reporting a bug to fixing one in the code, every contribution is appreciated and welcome. If you plan to implement a new feature or change the API, please [open a new issue here](https://github.com/Budibase/budibase/issues) first,

+so that we can make sure your effort does not go to waste.

+

+For instructions on setting up a Budibase environment for contributing, [click here](https://github.com/Budibase/budibase/tree/HEAD/docs/CONTRIBUTING.md).

+

+### Not sure where to start?

+A good place to start contributing is the [First time issues project](https://github.com/Budibase/budibase/projects/22).

+

+### How the repository is organized

+

+Budibase is a monorepo managed by Lerna. Lerna builds and publishes the Budibase packages, keeping them in sync whenever changes are made. At a high level, these are the packages that make up Budibase:

+

+- [packages/builder](https://github.com/Budibase/budibase/tree/HEAD/packages/builder) - contains the code for the client-side Svelte application of the Budibase builder.

+

+- [packages/client](https://github.com/Budibase/budibase/tree/HEAD/packages/client) - a module that runs in the browser, responsible for reading JSON definitions and creating living, breathing web apps from them.

+

+- [packages/server](https://github.com/Budibase/budibase/tree/HEAD/packages/server) - the Budibase server. This Koa application is responsible for serving the builder what it needs to generate Budibase applications. It also provides an API for interacting with the database and file storage.

+

+For more information, see [CONTRIBUTING.md](https://github.com/Budibase/budibase/blob/HEAD/docs/CONTRIBUTING.md)

+

+

+

+

+## 📝 License

+

+Budibase is open source, licensed under [GPL v3](https://www.gnu.org/licenses/gpl-3.0.en.html). The client and component libraries are licensed under [MPL](https://directory.fsf.org/wiki/License:MPL-2.0), so you can license the applications you build however you like.

+

+

+

+## ⭐ Stargazers over time

+

+[](https://starchart.cc/Budibase/budibase)

+

+If you run into problems while updating the builder, please use the guide [here](https://github.com/Budibase/budibase/blob/HEAD/docs/CONTRIBUTING.md#troubleshooting) to clean your environment.

+

+

+

+## Contributors ✨

+

+Thanks go to these wonderful people ([emoji key](https://allcontributors.org/docs/en/emoji-key)):

+

+

+

+

+

+

+

+

+

+

+

+This project follows the [all-contributors](https://github.com/all-contributors/all-contributors) specification.

+Contributions of any kind are welcome!

diff --git a/lerna.json b/lerna.json

index 4807a80646..c06173fe04 100644

--- a/lerna.json

+++ b/lerna.json

@@ -1,5 +1,5 @@

{

- "version": "2.20.14",

+ "version": "2.21.0",

"npmClient": "yarn",

"packages": [

"packages/*",

diff --git a/packages/backend-core/src/middleware/errorHandling.ts b/packages/backend-core/src/middleware/errorHandling.ts

index ebdd4107e9..2b8f7195ed 100644

--- a/packages/backend-core/src/middleware/errorHandling.ts

+++ b/packages/backend-core/src/middleware/errorHandling.ts

@@ -1,5 +1,6 @@

import { APIError } from "@budibase/types"

import * as errors from "../errors"

+import environment from "../environment"

export async function errorHandling(ctx: any, next: any) {

try {

@@ -14,15 +15,19 @@ export async function errorHandling(ctx: any, next: any) {

console.error(err)

}

- const error = errors.getPublicError(err)

- const body: APIError = {

+ let error: APIError = {

message: err.message,

status: status,

validationErrors: err.validation,

- error,

+ error: errors.getPublicError(err),

}

- ctx.body = body

+ if (environment.isTest() && ctx.headers["x-budibase-include-stacktrace"]) {

+ // @ts-ignore

+ error.stack = err.stack

+ }

+

+ ctx.body = error

}

}
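
The hunk above attaches `err.stack` to the response only when the process runs in test mode and the caller sends the `x-budibase-include-stacktrace` header. A minimal standalone sketch of that gating follows; `buildErrorBody`, `ErrorBody`, and the `isTest` flag are hypothetical stand-ins for the middleware's `ctx`, `APIError`, and `environment.isTest()`, not part of the change itself.

```typescript
// Hypothetical stand-in for the APIError shape used by the middleware.
interface ErrorBody {
  message: string
  status: number
  stack?: string
}

// Builds the response body. The stack is included only when both conditions
// hold: the process is in test mode AND the opt-in header is present.
function buildErrorBody(
  err: Error,
  status: number,
  headers: Record<string, string | undefined>,
  isTest: boolean
): ErrorBody {
  const body: ErrorBody = { message: err.message, status }
  if (isTest && headers["x-budibase-include-stacktrace"]) {
    body.stack = err.stack
  }
  return body
}
```

Requiring both conditions keeps stack traces out of production responses even if a client sends the header.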

diff --git a/packages/server/scripts/integrations/postgres/reset.sh b/packages/server/scripts/integrations/postgres/reset.sh

index 32778bd11f..8deb01cdf8 100755

--- a/packages/server/scripts/integrations/postgres/reset.sh

+++ b/packages/server/scripts/integrations/postgres/reset.sh

@@ -1,3 +1,3 @@

#!/bin/bash

-docker-compose down

+docker-compose down -v

docker volume prune -f

diff --git a/packages/server/src/api/controllers/permission.ts b/packages/server/src/api/controllers/permission.ts

index e2bd6c40e5..cdfa6d8b1c 100644

--- a/packages/server/src/api/controllers/permission.ts

+++ b/packages/server/src/api/controllers/permission.ts

@@ -7,6 +7,10 @@ import {

GetResourcePermsResponse,

ResourcePermissionInfo,

GetDependantResourcesResponse,

+ AddPermissionResponse,

+ AddPermissionRequest,

+ RemovePermissionRequest,

+ RemovePermissionResponse,

} from "@budibase/types"

import { getRoleParams } from "../../db/utils"

import {

@@ -16,9 +20,9 @@ import {

import { removeFromArray } from "../../utilities"

import sdk from "../../sdk"

-const PermissionUpdateType = {

- REMOVE: "remove",

- ADD: "add",

+const enum PermissionUpdateType {

+ REMOVE = "remove",

+ ADD = "add",

}

const SUPPORTED_LEVELS = CURRENTLY_SUPPORTED_LEVELS

@@ -39,7 +43,7 @@ async function updatePermissionOnRole(

resourceId,

level,

}: { roleId: string; resourceId: string; level: PermissionLevel },

- updateType: string

+ updateType: PermissionUpdateType

) {

const allowedAction = await sdk.permissions.resourceActionAllowed({

resourceId,

@@ -107,11 +111,15 @@ async function updatePermissionOnRole(

}

const response = await db.bulkDocs(docUpdates)

- return response.map((resp: any) => {

+ return response.map(resp => {

const version = docUpdates.find(role => role._id === resp.id)?.version

- resp._id = roles.getExternalRoleID(resp.id, version)

- delete resp.id

- return resp

+ const _id = roles.getExternalRoleID(resp.id, version)

+ return {

+ _id,

+ rev: resp.rev,

+ error: resp.error,

+ reason: resp.reason,

+ }

})

}

@@ -189,13 +197,14 @@ export async function getDependantResources(

}

}

-export async function addPermission(ctx: UserCtx) {

- ctx.body = await updatePermissionOnRole(ctx.params, PermissionUpdateType.ADD)

+export async function addPermission(ctx: UserCtx<void, AddPermissionResponse>) {

+ const params: AddPermissionRequest = ctx.params

+ ctx.body = await updatePermissionOnRole(params, PermissionUpdateType.ADD)

}

-export async function removePermission(ctx: UserCtx) {

- ctx.body = await updatePermissionOnRole(

- ctx.params,

- PermissionUpdateType.REMOVE

- )

+export async function removePermission(

+ ctx: UserCtx<void, RemovePermissionResponse>

+) {

+ const params: RemovePermissionRequest = ctx.params

+ ctx.body = await updatePermissionOnRole(params, PermissionUpdateType.REMOVE)

}

diff --git a/packages/server/src/api/controllers/query/index.ts b/packages/server/src/api/controllers/query/index.ts

index 973718ba48..3c21537484 100644

--- a/packages/server/src/api/controllers/query/index.ts

+++ b/packages/server/src/api/controllers/query/index.ts

@@ -17,10 +17,12 @@ import {

QueryPreview,

QuerySchema,

FieldType,

- type ExecuteQueryRequest,

- type ExecuteQueryResponse,

- type Row,

+ ExecuteQueryRequest,

+ ExecuteQueryResponse,

+ Row,

QueryParameter,

+ PreviewQueryRequest,

+ PreviewQueryResponse,

} from "@budibase/types"

import { ValidQueryNameRegex, utils as JsonUtils } from "@budibase/shared-core"

@@ -134,14 +136,16 @@ function enrichParameters(

return requestParameters

}

-export async function preview(ctx: UserCtx) {

+export async function preview(

+ ctx: UserCtx<PreviewQueryRequest, PreviewQueryResponse>

+) {

const { datasource, envVars } = await sdk.datasources.getWithEnvVars(

ctx.request.body.datasourceId

)

- const query: QueryPreview = ctx.request.body

// preview may not have a queryId as it hasn't been saved, but if it does

// this stops dynamic variables from calling the same query

- const { fields, parameters, queryVerb, transformer, queryId, schema } = query

+ const { fields, parameters, queryVerb, transformer, queryId, schema } =

+ ctx.request.body

let existingSchema = schema

if (queryId && !existingSchema) {

@@ -266,9 +270,7 @@ export async function preview(ctx: UserCtx) {

},

}

- const { rows, keys, info, extra } = (await Runner.run(

- inputs

- )) as QueryResponse

+ const { rows, keys, info, extra } = await Runner.run(inputs)

const { previewSchema, nestedSchemaFields } = getSchemaFields(rows, keys)

// if existing schema, update to include any previous schema keys

@@ -281,7 +283,7 @@ export async function preview(ctx: UserCtx) {

}

// remove configuration before sending event

delete datasource.config

- await events.query.previewed(datasource, query)

+ await events.query.previewed(datasource, ctx.request.body)

ctx.body = {

rows,

nestedSchemaFields,

@@ -295,7 +297,10 @@ export async function preview(ctx: UserCtx) {

}

async function execute(

- ctx: UserCtx,

+ ctx: UserCtx<

+ ExecuteQueryRequest,

+ ExecuteQueryResponse | Record<string, any>[]

+ >,

opts: any = { rowsOnly: false, isAutomation: false }

) {

const db = context.getAppDB()

@@ -350,18 +355,23 @@ async function execute(

}

}

-export async function executeV1(ctx: UserCtx) {

+export async function executeV1(

+ ctx: UserCtx<ExecuteQueryRequest, Record<string, any>[]>

+) {

return execute(ctx, { rowsOnly: true, isAutomation: false })

}

export async function executeV2(

- ctx: UserCtx,

+ ctx: UserCtx<

+ ExecuteQueryRequest,

+ ExecuteQueryResponse | Record<string, any>[]

+ >,

{ isAutomation }: { isAutomation?: boolean } = {}

) {

return execute(ctx, { rowsOnly: false, isAutomation })

}

-const removeDynamicVariables = async (queryId: any) => {

+const removeDynamicVariables = async (queryId: string) => {

const db = context.getAppDB()

const query = await db.get(queryId)

const datasource = await sdk.datasources.get(query.datasourceId)

@@ -384,7 +394,7 @@ const removeDynamicVariables = async (queryId: any) => {

export async function destroy(ctx: UserCtx) {

const db = context.getAppDB()

- const queryId = ctx.params.queryId

+ const queryId = ctx.params.queryId as string

await removeDynamicVariables(queryId)

const query = await db.get(queryId)

const datasource = await sdk.datasources.get(query.datasourceId)

diff --git a/packages/server/src/api/controllers/row/ExternalRequest.ts b/packages/server/src/api/controllers/row/ExternalRequest.ts

index b7dc02c0db..685af4e98e 100644

--- a/packages/server/src/api/controllers/row/ExternalRequest.ts

+++ b/packages/server/src/api/controllers/row/ExternalRequest.ts

@@ -7,6 +7,7 @@ import {

FilterType,

IncludeRelationship,

ManyToManyRelationshipFieldMetadata,

+ ManyToOneRelationshipFieldMetadata,

OneToManyRelationshipFieldMetadata,

Operation,

PaginationJson,

@@ -18,6 +19,7 @@ import {

SortJson,

SortType,

Table,

+ isManyToOne,

} from "@budibase/types"

import {

breakExternalTableId,

@@ -32,7 +34,9 @@ import { processObjectSync } from "@budibase/string-templates"

import { cloneDeep } from "lodash/fp"

import { processDates, processFormulas } from "../../../utilities/rowProcessor"

import { db as dbCore } from "@budibase/backend-core"

+import AliasTables from "./alias"

import sdk from "../../../sdk"

+import env from "../../../environment"

export interface ManyRelationship {

tableId?: string

@@ -101,6 +105,39 @@ function buildFilters(

}

}

+async function removeManyToManyRelationships(

+ rowId: string,

+ table: Table,

+ colName: string

+) {

+ const tableId = table._id!

+ const filters = buildFilters(rowId, {}, table)

+ // safety check, if there are no filters on deletion bad things happen

+ if (Object.keys(filters).length !== 0) {

+ return getDatasourceAndQuery({

+ endpoint: getEndpoint(tableId, Operation.DELETE),

+ body: { [colName]: null },

+ filters,

+ })

+ } else {

+ return []

+ }

+}

+

+async function removeOneToManyRelationships(rowId: string, table: Table) {

+ const tableId = table._id!

+ const filters = buildFilters(rowId, {}, table)

+ // safety check, if there are no filters on deletion bad things happen

+ if (Object.keys(filters).length !== 0) {

+ return getDatasourceAndQuery({

+ endpoint: getEndpoint(tableId, Operation.UPDATE),

+ filters,

+ })

+ } else {

+ return []

+ }

+}

+

/**

* This function checks the incoming parameters to make sure all the inputs are

* valid based on on the table schema. The main thing this is looking for is when a

@@ -178,13 +215,13 @@ function generateIdForRow(

function getEndpoint(tableId: string | undefined, operation: string) {

if (!tableId) {

- return {}

+ throw new Error("Cannot get endpoint information - no table ID specified")

}

const { datasourceId, tableName } = breakExternalTableId(tableId)

return {

- datasourceId,

- entityId: tableName,

- operation,

+ datasourceId: datasourceId!,

+ entityId: tableName!,

+ operation: operation as Operation,

}

}

@@ -304,6 +341,18 @@ export class ExternalRequest {

}

}

+ async getRow(table: Table, rowId: string): Promise<Row> {

+ const response = await getDatasourceAndQuery({

+ endpoint: getEndpoint(table._id!, Operation.READ),

+ filters: buildFilters(rowId, {}, table),

+ })

+ if (Array.isArray(response) && response.length > 0) {

+ return response[0]

+ } else {

+ throw new Error(`Cannot fetch row by ID "${rowId}"`)

+ }

+ }

+

inputProcessing(row: Row | undefined, table: Table) {

if (!row) {

return { row, manyRelationships: [] }

@@ -571,7 +620,9 @@ export class ExternalRequest {

* information.

*/

async lookupRelations(tableId: string, row: Row) {

- const related: { [key: string]: any } = {}

+ const related: {

+ [key: string]: { rows: Row[]; isMany: boolean; tableId: string }

+ } = {}

const { tableName } = breakExternalTableId(tableId)

if (!tableName) {

return related

@@ -589,14 +640,26 @@ export class ExternalRequest {

) {

continue

}

- const isMany = field.relationshipType === RelationshipType.MANY_TO_MANY

- const tableId = isMany ? field.through : field.tableId

+ let tableId: string | undefined,

+ lookupField: string | undefined,

+ fieldName: string | undefined

+ if (isManyToMany(field)) {

+ tableId = field.through

+ lookupField = primaryKey

+ fieldName = field.throughTo || primaryKey

+ } else if (isManyToOne(field)) {

+ tableId = field.tableId

+ lookupField = field.foreignKey

+ fieldName = field.fieldName

+ }

+ if (!tableId || !lookupField || !fieldName) {

+ throw new Error(

+ "Unable to lookup relationships - undefined column properties."

+ )

+ }

const { tableName: relatedTableName } = breakExternalTableId(tableId)

// @ts-ignore

const linkPrimaryKey = this.tables[relatedTableName].primary[0]

-

- const lookupField = isMany ? primaryKey : field.foreignKey

- const fieldName = isMany ? field.throughTo || primaryKey : field.fieldName

if (!lookupField || !row[lookupField]) {

continue

}

@@ -609,9 +672,12 @@ export class ExternalRequest {

},

})

// this is the response from knex if no rows found

- const rows = !response[0].read ? response : []

- const storeTo = isMany ? field.throughFrom || linkPrimaryKey : fieldName

- related[storeTo] = { rows, isMany, tableId }

+ const rows: Row[] =

+ !Array.isArray(response) || response?.[0].read ? [] : response

+ const storeTo = isManyToMany(field)

+ ? field.throughFrom || linkPrimaryKey

+ : fieldName

+ related[storeTo] = { rows, isMany: isManyToMany(field), tableId }

}

return related

}

@@ -697,24 +763,43 @@ export class ExternalRequest {

continue

}

for (let row of rows) {

- const filters = buildFilters(generateIdForRow(row, table), {}, table)

- // safety check, if there are no filters on deletion bad things happen

- if (Object.keys(filters).length !== 0) {

- const op = isMany ? Operation.DELETE : Operation.UPDATE

- const body = isMany ? null : { [colName]: null }

- promises.push(

- getDatasourceAndQuery({

- endpoint: getEndpoint(tableId, op),

- body,

- filters,

- })

- )

+ const rowId = generateIdForRow(row, table)

+ const promise: Promise<any> = isMany

+ ? removeManyToManyRelationships(rowId, table, colName)

+ : removeOneToManyRelationships(rowId, table)

+ if (promise) {

+ promises.push(promise)

}

}

}

await Promise.all(promises)

}

+ async removeRelationshipsToRow(table: Table, rowId: string) {

+ const row = await this.getRow(table, rowId)

+ const related = await this.lookupRelations(table._id!, row)

+ for (let column of Object.values(table.schema)) {

+ const relationshipColumn = column as RelationshipFieldMetadata

+ if (!isManyToOne(relationshipColumn)) {

+ continue

+ }

+ const { rows, isMany, tableId } = related[relationshipColumn.fieldName]

+ const table = this.getTable(tableId)!

+ await Promise.all(

+ rows.map(row => {

+ const rowId = generateIdForRow(row, table)

+ return isMany

+ ? removeManyToManyRelationships(

+ rowId,

+ table,

+ relationshipColumn.fieldName

+ )

+ : removeOneToManyRelationships(rowId, table)

+ })

+ )

+ }

+ }

+

/**

* This function is a bit crazy, but the exact purpose of it is to protect against the scenario in which

* you have column overlap in relationships, e.g. we join a few different tables and they all have the

@@ -804,7 +889,7 @@ export class ExternalRequest {

}

let json = {

endpoint: {

- datasourceId,

+ datasourceId: datasourceId!,

entityId: tableName,

operation,

},

@@ -826,17 +911,30 @@ export class ExternalRequest {

},

}

- // can't really use response right now

- const response = await getDatasourceAndQuery(json)

- // handle many to many relationships now if we know the ID (could be auto increment)

+ // remove any relationships that could block deletion

+ if (operation === Operation.DELETE && id) {

+ await this.removeRelationshipsToRow(table, generateRowIdField(id))

+ }

+

+ // aliasing can be disabled fully if desired

+ let response

+ if (env.SQL_ALIASING_DISABLE) {

+ response = await getDatasourceAndQuery(json)

+ } else {

+ const aliasing = new AliasTables(Object.keys(this.tables))

+ response = await aliasing.queryWithAliasing(json)

+ }

+

+ const responseRows = Array.isArray(response) ? response : []

+ // handle many-to-many relationships now if we know the ID (could be auto increment)

if (operation !== Operation.READ) {

await this.handleManyRelationships(

table._id || "",

- response[0],

+ responseRows[0],

processed.manyRelationships

)

}

- const output = this.outputProcessing(response, table, relationships)

+ const output = this.outputProcessing(responseRows, table, relationships)

// if reading it'll just be an array of rows, return whole thing

if (operation === Operation.READ) {

return (

diff --git a/packages/server/src/api/controllers/row/alias.ts b/packages/server/src/api/controllers/row/alias.ts

new file mode 100644

index 0000000000..9658a0d638

--- /dev/null

+++ b/packages/server/src/api/controllers/row/alias.ts

@@ -0,0 +1,166 @@

+import {

+ QueryJson,

+ SearchFilters,

+ Table,

+ Row,

+ DatasourcePlusQueryResponse,

+} from "@budibase/types"

+import { getDatasourceAndQuery } from "../../../sdk/app/rows/utils"

+import { cloneDeep } from "lodash"

+

+class CharSequence {

+ static alphabet = "abcdefghijklmnopqrstuvwxyz"

+ counters: number[]

+

+ constructor() {

+ this.counters = [0]

+ }

+

+ getCharacter(): string {

+ const char = this.counters.map(i => CharSequence.alphabet[i]).join("")

+ for (let i = this.counters.length - 1; i >= 0; i--) {

+ if (this.counters[i] < CharSequence.alphabet.length - 1) {

+ this.counters[i]++

+ return char

+ }

+ this.counters[i] = 0

+ }

+ this.counters.unshift(0)

+ return char

+ }

+}

+

+export default class AliasTables {

+ aliases: Record<string, string>

+ tableAliases: Record<string, string>

+ tableNames: string[]

+ charSeq: CharSequence

+

+ constructor(tableNames: string[]) {

+ this.tableNames = tableNames

+ this.aliases = {}

+ this.tableAliases = {}

+ this.charSeq = new CharSequence()

+ }

+

+ getAlias(tableName: string) {

+ if (this.aliases[tableName]) {

+ return this.aliases[tableName]

+ }

+ const char = this.charSeq.getCharacter()

+ this.aliases[tableName] = char

+ this.tableAliases[char] = tableName

+ return char

+ }

+

+ aliasField(field: string) {

+ const tableNames = this.tableNames

+ if (field.includes(".")) {

+ const [tableName, column] = field.split(".")

+ const foundTableName = tableNames.find(name => {

+ const idx = tableName.indexOf(name)

+ if (idx === -1 || idx > 1) {

+ return

+ }

+ return Math.abs(tableName.length - name.length) <= 2

+ })

+ if (foundTableName) {

+ const aliasedTableName = tableName.replace(

+ foundTableName,

+ this.getAlias(foundTableName)

+ )

+ field = `${aliasedTableName}.${column}`

+ }

+ }

+ return field

+ }

+

+ reverse<T extends Row | Row[]>(rows: T): T {

+ const process = (row: Row) => {

+ const final: Row = {}

+ for (let [key, value] of Object.entries(row)) {

+ if (!key.includes(".")) {

+ final[key] = value

+ } else {

+ const [alias, column] = key.split(".")

+ const tableName = this.tableAliases[alias] || alias

+ final[`${tableName}.${column}`] = value

+ }

+ }

+ return final

+ }

+ if (Array.isArray(rows)) {

+ return rows.map(row => process(row)) as T

+ } else {

+ return process(rows) as T

+ }

+ }

+

+ aliasMap(tableNames: (string | undefined)[]) {

+ const map: Record<string, string> = {}

+ for (let tableName of tableNames) {

+ if (tableName) {

+ map[tableName] = this.getAlias(tableName)

+ }

+ }

+ return map

+ }

+

+ async queryWithAliasing(json: QueryJson): DatasourcePlusQueryResponse {

+ json = cloneDeep(json)

+ const aliasTable = (table: Table) => ({

+ ...table,

+ name: this.getAlias(table.name),

+ })

+ // run through the query json to update anywhere a table may be used

+ if (json.resource?.fields) {

+ json.resource.fields = json.resource.fields.map(field =>

+ this.aliasField(field)

+ )

+ }

+ if (json.filters) {

+ for (let [filterKey, filter] of Object.entries(json.filters)) {

+ if (typeof filter !== "object") {

+ continue

+ }

+ const aliasedFilters: typeof filter = {}

+ for (let key of Object.keys(filter)) {

+ aliasedFilters[this.aliasField(key)] = filter[key]

+ }

+ json.filters[filterKey as keyof SearchFilters] = aliasedFilters

+ }

+ }

+ if (json.relationships) {

+ json.relationships = json.relationships.map(relationship => ({

+ ...relationship,

+ aliases: this.aliasMap([

+ relationship.through,

+ relationship.tableName,

+ json.endpoint.entityId,

+ ]),

+ }))

+ }

+ if (json.meta?.table) {

+ json.meta.table = aliasTable(json.meta.table)

+ }

+ if (json.meta?.tables) {

+ const aliasedTables: Record<string, Table> = {}

+ for (let [tableName, table] of Object.entries(json.meta.tables)) {

+ aliasedTables[this.getAlias(tableName)] = aliasTable(table)

+ }

+ json.meta.tables = aliasedTables

+ }

+ // invert and return

+ const invertedTableAliases: Record<string, string> = {}

+ for (let [key, value] of Object.entries(this.tableAliases)) {

+ invertedTableAliases[value] = key

+ }

+ json.tableAliases = invertedTableAliases

+ const response = await getDatasourceAndQuery(json)

+ if (Array.isArray(response)) {

+ return this.reverse(response)

+ } else {

+ return response

+ }

+ }

+}
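
The `CharSequence` class in the new `alias.ts` hands out table aliases by treating `counters` like an odometer over the alphabet: `a` through `z`, then `aa`, `ab`, and so on. The sketch below is a standalone copy of that logic for illustration only; the names `AliasSequence` and `next` are hypothetical and chosen so it is not mistaken for the shipped class.

```typescript
// Illustrative copy of the counter-based alias generator from the diff.
class AliasSequence {
  static alphabet = "abcdefghijklmnopqrstuvwxyz"
  counters: number[] = [0]

  next(): string {
    // the alias is read off the counters before they are advanced
    const alias = this.counters.map(i => AliasSequence.alphabet[i]).join("")
    // advance like an odometer, right-most position first
    for (let i = this.counters.length - 1; i >= 0; i--) {
      if (this.counters[i] < AliasSequence.alphabet.length - 1) {
        this.counters[i]++
        return alias
      }
      this.counters[i] = 0
    }
    // every position rolled over, so grow the alias by one character
    this.counters.unshift(0)
    return alias
  }
}

const seq = new AliasSequence()
const generated: string[] = []
for (let i = 0; i < 28; i++) {
  generated.push(seq.next())
}
console.log(generated[0], generated[25], generated[26], generated[27])
// prints: a z aa ab
```

Because aliases are short and unique per instance, `AliasTables` can map them back to the real table names in `reverse` once the query returns.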

diff --git a/packages/server/src/api/controllers/row/index.ts b/packages/server/src/api/controllers/row/index.ts

index ec56919d12..54c294c42b 100644

--- a/packages/server/src/api/controllers/row/index.ts

+++ b/packages/server/src/api/controllers/row/index.ts

@@ -211,7 +211,7 @@ export async function validate(ctx: Ctx) {

}

}

-export async function fetchEnrichedRow(ctx: any) {

+export async function fetchEnrichedRow(ctx: UserCtx) {

const tableId = utils.getTableId(ctx)

ctx.body = await pickApi(tableId).fetchEnrichedRow(ctx)

}

diff --git a/packages/server/src/api/controllers/static/index.ts b/packages/server/src/api/controllers/static/index.ts

index 5a3803e6d5..c718d5f704 100644

--- a/packages/server/src/api/controllers/static/index.ts

+++ b/packages/server/src/api/controllers/static/index.ts

@@ -170,6 +170,7 @@ export const serveApp = async function (ctx: Ctx) {

if (!env.isJest()) {

const plugins = objectStore.enrichPluginURLs(appInfo.usedPlugins)

const { head, html, css } = AppComponent.render({

+ title: branding?.platformTitle || `${appInfo.name}`,

metaImage:

branding?.metaImageUrl ||

"https://res.cloudinary.com/daog6scxm/image/upload/v1698759482/meta-images/plain-branded-meta-image-coral_ocxmgu.png",

diff --git a/packages/server/src/api/routes/tests/application.spec.ts b/packages/server/src/api/routes/tests/application.spec.ts

index dbe4eb51ae..dc235dbd01 100644

--- a/packages/server/src/api/routes/tests/application.spec.ts

+++ b/packages/server/src/api/routes/tests/application.spec.ts

@@ -184,7 +184,7 @@ describe("/applications", () => {

it("app should not sync if production", async () => {

const { message } = await config.api.application.sync(

app.appId.replace("_dev", ""),

- { statusCode: 400 }

+ { status: 400 }

)

expect(message).toEqual(

diff --git a/packages/server/src/api/routes/tests/attachment.spec.ts b/packages/server/src/api/routes/tests/attachment.spec.ts

index e230b0688a..aa02ea898e 100644

--- a/packages/server/src/api/routes/tests/attachment.spec.ts

+++ b/packages/server/src/api/routes/tests/attachment.spec.ts

@@ -29,7 +29,7 @@ describe("/api/applications/:appId/sync", () => {

let resp = (await config.api.attachment.process(

"ohno.exe",

Buffer.from([0]),

- { expectStatus: 400 }

+ { status: 400 }

)) as unknown as APIError

expect(resp.message).toContain("invalid extension")

})

@@ -40,7 +40,7 @@ describe("/api/applications/:appId/sync", () => {

let resp = (await config.api.attachment.process(

"OHNO.EXE",

Buffer.from([0]),

- { expectStatus: 400 }

+ { status: 400 }

)) as unknown as APIError

expect(resp.message).toContain("invalid extension")

})

@@ -51,7 +51,7 @@ describe("/api/applications/:appId/sync", () => {

undefined as any,

undefined as any,

{

- expectStatus: 400,

+ status: 400,

}

)) as unknown as APIError

expect(resp.message).toContain("No file provided")

diff --git a/packages/server/src/api/routes/tests/backup.spec.ts b/packages/server/src/api/routes/tests/backup.spec.ts

index becbeb5480..c862106d58 100644

--- a/packages/server/src/api/routes/tests/backup.spec.ts

+++ b/packages/server/src/api/routes/tests/backup.spec.ts

@@ -19,11 +19,8 @@ describe("/backups", () => {

describe("/api/backups/export", () => {

it("should be able to export app", async () => {

- const { body, headers } = await config.api.backup.exportBasicBackup(

- config.getAppId()!

- )

+ const body = await config.api.backup.exportBasicBackup(config.getAppId()!)

expect(body instanceof Buffer).toBe(true)

- expect(headers["content-type"]).toEqual("application/gzip")

expect(events.app.exported).toBeCalledTimes(1)

})

@@ -38,15 +35,13 @@ describe("/backups", () => {

it("should infer the app name from the app", async () => {

tk.freeze(mocks.date.MOCK_DATE)

- const { headers } = await config.api.backup.exportBasicBackup(

- config.getAppId()!

- )

-

- expect(headers["content-disposition"]).toEqual(

- `attachment; filename="${

- config.getApp().name

- }-export-${mocks.date.MOCK_DATE.getTime()}.tar.gz"`

- )

+ await config.api.backup.exportBasicBackup(config.getAppId()!, {

+ headers: {

+ "content-disposition": `attachment; filename="${

+ config.getApp().name

+ }-export-${mocks.date.MOCK_DATE.getTime()}.tar.gz"`,

+ },

+ })

})

})

diff --git a/packages/server/src/api/routes/tests/permissions.spec.ts b/packages/server/src/api/routes/tests/permissions.spec.ts

index 129bc00b44..1eabf6edbb 100644

--- a/packages/server/src/api/routes/tests/permissions.spec.ts

+++ b/packages/server/src/api/routes/tests/permissions.spec.ts

@@ -45,7 +45,7 @@ describe("/permission", () => {

table = (await config.createTable()) as typeof table

row = await config.createRow()

view = await config.api.viewV2.create({ tableId: table._id })

- perms = await config.api.permission.set({

+ perms = await config.api.permission.add({

roleId: STD_ROLE_ID,

resourceId: table._id,

level: PermissionLevel.READ,

@@ -88,13 +88,13 @@ describe("/permission", () => {

})

it("should get resource permissions with multiple roles", async () => {

- perms = await config.api.permission.set({

+ perms = await config.api.permission.add({

roleId: HIGHER_ROLE_ID,

resourceId: table._id,

level: PermissionLevel.WRITE,

})

const res = await config.api.permission.get(table._id)

- expect(res.body).toEqual({

+ expect(res).toEqual({

permissions: {

read: { permissionType: "EXPLICIT", role: STD_ROLE_ID },

write: { permissionType: "EXPLICIT", role: HIGHER_ROLE_ID },

@@ -117,16 +117,19 @@ describe("/permission", () => {

level: PermissionLevel.READ,

})

- const response = await config.api.permission.set(

+ await config.api.permission.add(

{

roleId: STD_ROLE_ID,

resourceId: table._id,

level: PermissionLevel.EXECUTE,

},

- { expectStatus: 403 }

- )

- expect(response.message).toEqual(

- "You are not allowed to 'read' the resource type 'datasource'"

+ {

+ status: 403,

+ body: {

+ message:

+ "You are not allowed to 'read' the resource type 'datasource'",

+ },

+ }

)

})

})

@@ -138,9 +141,9 @@ describe("/permission", () => {

resourceId: table._id,

level: PermissionLevel.READ,

})

- expect(res.body[0]._id).toEqual(STD_ROLE_ID)

+ expect(res[0]._id).toEqual(STD_ROLE_ID)

const permsRes = await config.api.permission.get(table._id)

- expect(permsRes.body[STD_ROLE_ID]).toBeUndefined()

+ expect(permsRes.permissions[STD_ROLE_ID]).toBeUndefined()

})

it("throw forbidden if the action is not allowed for the resource", async () => {

@@ -156,10 +159,13 @@ describe("/permission", () => {

resourceId: table._id,

level: PermissionLevel.EXECUTE,

},

- { expectStatus: 403 }

- )

- expect(response.body.message).toEqual(

- "You are not allowed to 'read' the resource type 'datasource'"

+ {

+ status: 403,

+ body: {

+ message:

+ "You are not allowed to 'read' the resource type 'datasource'",

+ },

+ }

)

})

})

@@ -181,10 +187,8 @@ describe("/permission", () => {

// replicate changes before checking permissions

await config.publish()

- const res = await config.api.viewV2.search(view.id, undefined, {

- usePublicUser: true,

- })

- expect(res.body.rows[0]._id).toEqual(row._id)

+ const res = await config.api.viewV2.publicSearch(view.id)

+ expect(res.rows[0]._id).toEqual(row._id)

})

it("should not be able to access the view data when the table is not public and there are no view permissions overrides", async () => {

@@ -196,14 +200,11 @@ describe("/permission", () => {

// replicate changes before checking permissions

await config.publish()

- await config.api.viewV2.search(view.id, undefined, {

- expectStatus: 403,

- usePublicUser: true,

- })

+ await config.api.viewV2.publicSearch(view.id, undefined, { status: 403 })

})

it("should ignore the view permissions if the flag is not on", async () => {

- await config.api.permission.set({

+ await config.api.permission.add({

roleId: STD_ROLE_ID,

resourceId: view.id,

level: PermissionLevel.READ,

@@ -216,15 +217,14 @@ describe("/permission", () => {

// replicate changes before checking permissions

await config.publish()

- await config.api.viewV2.search(view.id, undefined, {

- expectStatus: 403,

- usePublicUser: true,

+ await config.api.viewV2.publicSearch(view.id, undefined, {

+ status: 403,

})

})

it("should use the view permissions if the flag is on", async () => {

mocks.licenses.useViewPermissions()

- await config.api.permission.set({

+ await config.api.permission.add({

roleId: STD_ROLE_ID,

resourceId: view.id,

level: PermissionLevel.READ,

@@ -237,10 +237,8 @@ describe("/permission", () => {

// replicate changes before checking permissions

await config.publish()

- const res = await config.api.viewV2.search(view.id, undefined, {

- usePublicUser: true,

- })

- expect(res.body.rows[0]._id).toEqual(row._id)

+ const res = await config.api.viewV2.publicSearch(view.id)

+ expect(res.rows[0]._id).toEqual(row._id)

})

it("shouldn't allow writing from a public user", async () => {

@@ -277,7 +275,7 @@ describe("/permission", () => {

const res = await config.api.permission.get(legacyView.name)

- expect(res.body).toEqual({

+ expect(res).toEqual({

permissions: {

read: {

permissionType: "BASE",

diff --git a/packages/server/src/api/routes/tests/queries/query.seq.spec.ts b/packages/server/src/api/routes/tests/queries/query.seq.spec.ts

index 2bbc8366ea..c5cb188cbc 100644

--- a/packages/server/src/api/routes/tests/queries/query.seq.spec.ts

+++ b/packages/server/src/api/routes/tests/queries/query.seq.spec.ts

@@ -397,15 +397,16 @@ describe("/queries", () => {

})

it("should fail with invalid integration type", async () => {

- const response = await config.api.datasource.create(

- {

- ...basicDatasource().datasource,

- source: "INVALID_INTEGRATION" as SourceName,

+ const datasource: Datasource = {

+ ...basicDatasource().datasource,

+ source: "INVALID_INTEGRATION" as SourceName,

+ }

+ await config.api.datasource.create(datasource, {

+ status: 500,

+ body: {

+ message: "No datasource implementation found.",

},

- { expectStatus: 500, rawResponse: true }

- )

-

- expect(response.body.message).toBe("No datasource implementation found.")

+ })

})

})

diff --git a/packages/server/src/api/routes/tests/role.spec.js b/packages/server/src/api/routes/tests/role.spec.js

index a653b573b2..4575f9b213 100644

--- a/packages/server/src/api/routes/tests/role.spec.js

+++ b/packages/server/src/api/routes/tests/role.spec.js

@@ -93,7 +93,7 @@ describe("/roles", () => {

it("should be able to get the role with a permission added", async () => {

const table = await config.createTable()

- await config.api.permission.set({

+ await config.api.permission.add({

roleId: BUILTIN_ROLE_IDS.POWER,

resourceId: table._id,

level: PermissionLevel.READ,

diff --git a/packages/server/src/api/routes/tests/row.spec.ts b/packages/server/src/api/routes/tests/row.spec.ts

index 726e493b2d..c02159bb42 100644

--- a/packages/server/src/api/routes/tests/row.spec.ts

+++ b/packages/server/src/api/routes/tests/row.spec.ts

@@ -7,6 +7,7 @@ import { context, InternalTable, roles, tenancy } from "@budibase/backend-core"

import { quotas } from "@budibase/pro"

import {

AutoFieldSubType,

+ DeleteRow,

FieldSchema,

FieldType,

FieldTypeSubtypes,

@@ -106,9 +107,6 @@ describe.each([

mocks.licenses.useCloudFree()

})

- const loadRow = (id: string, tbl_Id: string, status = 200) =>

- config.api.row.get(tbl_Id, id, { expectStatus: status })

-

const getRowUsage = async () => {

const { total } = await config.doInContext(undefined, () =>

quotas.getCurrentUsageValues(QuotaUsageType.STATIC, StaticQuotaName.ROWS)

@@ -235,7 +233,7 @@ describe.each([

const res = await config.api.row.get(tableId, existing._id!)

- expect(res.body).toEqual({

+ expect(res).toEqual({

...existing,

...defaultRowFields,

})

@@ -265,7 +263,7 @@ describe.each([

await config.createRow()

await config.api.row.get(tableId, "1234567", {

- expectStatus: 404,

+ status: 404,

})

})

@@ -395,7 +393,7 @@ describe.each([

const createdRow = await config.createRow(row)

const id = createdRow._id!

- const saved = (await loadRow(id, table._id!)).body

+ const saved = await config.api.row.get(table._id!, id)

expect(saved.stringUndefined).toBe(undefined)

expect(saved.stringNull).toBe(null)

@@ -476,8 +474,8 @@ describe.each([

)

const row = await config.api.row.get(table._id!, createRowResponse._id!)

- expect(row.body.Story).toBeUndefined()

- expect(row.body).toEqual({

+ expect(row.Story).toBeUndefined()

+ expect(row).toEqual({

...defaultRowFields,

OrderID: 1111,

Country: "Aussy",

@@ -524,10 +522,10 @@ describe.each([

expect(row.name).toEqual("Updated Name")

expect(row.description).toEqual(existing.description)

- const savedRow = await loadRow(row._id!, table._id!)

+ const savedRow = await config.api.row.get(table._id!, row._id!)

- expect(savedRow.body.description).toEqual(existing.description)

- expect(savedRow.body.name).toEqual("Updated Name")

+ expect(savedRow.description).toEqual(existing.description)

+ expect(savedRow.name).toEqual("Updated Name")

await assertRowUsage(rowUsage)

})

@@ -543,7 +541,7 @@ describe.each([

tableId: table._id!,

name: 1,

},

- { expectStatus: 400 }

+ { status: 400 }

)

await assertRowUsage(rowUsage)

@@ -582,8 +580,8 @@ describe.each([

})

let getResp = await config.api.row.get(table._id!, row._id!)

- expect(getResp.body.user1[0]._id).toEqual(user1._id)

- expect(getResp.body.user2[0]._id).toEqual(user2._id)

+ expect(getResp.user1[0]._id).toEqual(user1._id)

+ expect(getResp.user2[0]._id).toEqual(user2._id)

let patchResp = await config.api.row.patch(table._id!, {

_id: row._id!,

@@ -595,8 +593,8 @@ describe.each([

expect(patchResp.user2[0]._id).toEqual(user2._id)

getResp = await config.api.row.get(table._id!, row._id!)

- expect(getResp.body.user1[0]._id).toEqual(user2._id)

- expect(getResp.body.user2[0]._id).toEqual(user2._id)

+ expect(getResp.user1[0]._id).toEqual(user2._id)

+ expect(getResp.user2[0]._id).toEqual(user2._id)

})

it("should be able to update relationships when both columns are same name", async () => {

@@ -609,7 +607,7 @@ describe.each([

description: "test",

relationship: [row._id],

})

- row = (await config.api.row.get(table._id!, row._id!)).body

+ row = await config.api.row.get(table._id!, row._id!)

expect(row.relationship.length).toBe(1)

const resp = await config.api.row.patch(table._id!, {

_id: row._id!,

@@ -632,8 +630,10 @@ describe.each([

const createdRow = await config.createRow()

const rowUsage = await getRowUsage()

- const res = await config.api.row.delete(table._id!, [createdRow])

- expect(res.body[0]._id).toEqual(createdRow._id)

+ const res = await config.api.row.bulkDelete(table._id!, {

+ rows: [createdRow],

+ })

+ expect(res[0]._id).toEqual(createdRow._id)

await assertRowUsage(rowUsage - 1)

})

})

@@ -682,10 +682,12 @@ describe.each([

const row2 = await config.createRow()

const rowUsage = await getRowUsage()

- const res = await config.api.row.delete(table._id!, [row1, row2])

+ const res = await config.api.row.bulkDelete(table._id!, {

+ rows: [row1, row2],

+ })

- expect(res.body.length).toEqual(2)

- await loadRow(row1._id!, table._id!, 404)

+ expect(res.length).toEqual(2)

+ await config.api.row.get(table._id!, row1._id!, { status: 404 })

await assertRowUsage(rowUsage - 2)

})

@@ -697,14 +699,12 @@ describe.each([

])

const rowUsage = await getRowUsage()

- const res = await config.api.row.delete(table._id!, [

- row1,

- row2._id,

- { _id: row3._id },

- ])

+ const res = await config.api.row.bulkDelete(table._id!, {

+ rows: [row1, row2._id!, { _id: row3._id }],

+ })

- expect(res.body.length).toEqual(3)

- await loadRow(row1._id!, table._id!, 404)

+ expect(res.length).toEqual(3)

+ await config.api.row.get(table._id!, row1._id!, { status: 404 })

await assertRowUsage(rowUsage - 3)

})

@@ -712,34 +712,36 @@ describe.each([

const row1 = await config.createRow()

const rowUsage = await getRowUsage()

- const res = await config.api.row.delete(table._id!, row1)

+ const res = await config.api.row.delete(table._id!, row1 as DeleteRow)

- expect(res.body.id).toEqual(row1._id)

- await loadRow(row1._id!, table._id!, 404)

+ expect(res.id).toEqual(row1._id)

+ await config.api.row.get(table._id!, row1._id!, { status: 404 })

await assertRowUsage(rowUsage - 1)

})

it("Should ignore malformed/invalid delete requests", async () => {

const rowUsage = await getRowUsage()

- const res = await config.api.row.delete(

- table._id!,

- { not: "valid" },

- { expectStatus: 400 }

- )

- expect(res.body.message).toEqual("Invalid delete rows request")

-

- const res2 = await config.api.row.delete(

- table._id!,

- { rows: 123 },

- { expectStatus: 400 }

- )

- expect(res2.body.message).toEqual("Invalid delete rows request")

-

- const res3 = await config.api.row.delete(table._id!, "invalid", {

- expectStatus: 400,

+ await config.api.row.delete(table._id!, { not: "valid" } as any, {

+ status: 400,

+ body: {

+ message: "Invalid delete rows request",

+ },

+ })

+

+ await config.api.row.delete(table._id!, { rows: 123 } as any, {

+ status: 400,

+ body: {

+ message: "Invalid delete rows request",

+ },

+ })

+

+ await config.api.row.delete(table._id!, "invalid" as any, {

+ status: 400,

+ body: {

+ message: "Invalid delete rows request",

+ },

})

- expect(res3.body.message).toEqual("Invalid delete rows request")

await assertRowUsage(rowUsage)

})

@@ -757,16 +759,16 @@ describe.each([

const row = await config.createRow()

const rowUsage = await getRowUsage()

- const res = await config.api.legacyView.get(table._id!)

- expect(res.body.length).toEqual(1)

- expect(res.body[0]._id).toEqual(row._id)

+ const rows = await config.api.legacyView.get(table._id!)

+ expect(rows.length).toEqual(1)

+ expect(rows[0]._id).toEqual(row._id)

await assertRowUsage(rowUsage)

})

it("should throw an error if view doesn't exist", async () => {

const rowUsage = await getRowUsage()

- await config.api.legacyView.get("derp", { expectStatus: 404 })

+ await config.api.legacyView.get("derp", { status: 404 })

await assertRowUsage(rowUsage)

})

@@ -781,9 +783,9 @@ describe.each([

const row = await config.createRow()

const rowUsage = await getRowUsage()

- const res = await config.api.legacyView.get(view.name)

- expect(res.body.length).toEqual(1)

- expect(res.body[0]._id).toEqual(row._id)

+ const rows = await config.api.legacyView.get(view.name)

+ expect(rows.length).toEqual(1)

+ expect(rows[0]._id).toEqual(row._id)

await assertRowUsage(rowUsage)

})

@@ -841,8 +843,8 @@ describe.each([

linkedTable._id!,

secondRow._id!

)

- expect(resBasic.body.link.length).toBe(1)

- expect(resBasic.body.link[0]).toEqual({

+ expect(resBasic.link.length).toBe(1)

+ expect(resBasic.link[0]).toEqual({

_id: firstRow._id,

primaryDisplay: firstRow.name,

})

@@ -852,10 +854,10 @@ describe.each([

linkedTable._id!,

secondRow._id!

)

- expect(resEnriched.body.link.length).toBe(1)

- expect(resEnriched.body.link[0]._id).toBe(firstRow._id)

- expect(resEnriched.body.link[0].name).toBe("Test Contact")

- expect(resEnriched.body.link[0].description).toBe("original description")

+ expect(resEnriched.link.length).toBe(1)

+ expect(resEnriched.link[0]._id).toBe(firstRow._id)

+ expect(resEnriched.link[0].name).toBe("Test Contact")

+ expect(resEnriched.link[0].description).toBe("original description")

await assertRowUsage(rowUsage)

})

})

@@ -903,7 +905,7 @@ describe.each([

const res = await config.api.row.exportRows(table._id!, {

rows: [existing._id!],

})

- const results = JSON.parse(res.text)

+ const results = JSON.parse(res)

expect(results.length).toEqual(1)

const row = results[0]

@@ -922,7 +924,7 @@ describe.each([

rows: [existing._id!],

columns: ["_id"],

})

- const results = JSON.parse(res.text)

+ const results = JSON.parse(res)

expect(results.length).toEqual(1)

const row = results[0]

@@ -1000,7 +1002,7 @@ describe.each([

})

const row = await config.api.row.get(table._id!, newRow._id!)

- expect(row.body).toEqual({

+ expect(row).toEqual({

name: data.name,

surname: data.surname,

address: data.address,

@@ -1010,9 +1012,9 @@ describe.each([

id: newRow.id,

...defaultRowFields,

})

- expect(row.body._viewId).toBeUndefined()

- expect(row.body.age).toBeUndefined()

- expect(row.body.jobTitle).toBeUndefined()

+ expect(row._viewId).toBeUndefined()

+ expect(row.age).toBeUndefined()

+ expect(row.jobTitle).toBeUndefined()

})

})

@@ -1042,7 +1044,7 @@ describe.each([

})

const row = await config.api.row.get(tableId, newRow._id!)

- expect(row.body).toEqual({

+ expect(row).toEqual({

...newRow,

name: newData.name,

address: newData.address,

@@ -1051,9 +1053,9 @@ describe.each([

id: newRow.id,

...defaultRowFields,

})

- expect(row.body._viewId).toBeUndefined()

- expect(row.body.age).toBeUndefined()

- expect(row.body.jobTitle).toBeUndefined()

+ expect(row._viewId).toBeUndefined()

+ expect(row.age).toBeUndefined()

+ expect(row.jobTitle).toBeUndefined()

})

})

@@ -1071,12 +1073,12 @@ describe.each([

const createdRow = await config.createRow()

const rowUsage = await getRowUsage()

- await config.api.row.delete(view.id, [createdRow])

+ await config.api.row.bulkDelete(view.id, { rows: [createdRow] })

await assertRowUsage(rowUsage - 1)

await config.api.row.get(tableId, createdRow._id!, {

- expectStatus: 404,

+ status: 404,

})

})

@@ -1097,17 +1099,17 @@ describe.each([

])

const rowUsage = await getRowUsage()

- await config.api.row.delete(view.id, [rows[0], rows[2]])

+ await config.api.row.bulkDelete(view.id, { rows: [rows[0], rows[2]] })

await assertRowUsage(rowUsage - 2)

await config.api.row.get(tableId, rows[0]._id!, {

- expectStatus: 404,

+ status: 404,

})

await config.api.row.get(tableId, rows[2]._id!, {

- expectStatus: 404,

+ status: 404,

})

- await config.api.row.get(tableId, rows[1]._id!, { expectStatus: 200 })

+ await config.api.row.get(tableId, rows[1]._id!, { status: 200 })

})

})

@@ -1154,8 +1156,8 @@ describe.each([

const createViewResponse = await config.createView()

const response = await config.api.viewV2.search(createViewResponse.id)

- expect(response.body.rows).toHaveLength(10)

- expect(response.body).toEqual({

+ expect(response.rows).toHaveLength(10)

+ expect(response).toEqual({

rows: expect.arrayContaining(

rows.map(r => ({

_viewId: createViewResponse.id,

@@ -1206,8 +1208,8 @@ describe.each([

const response = await config.api.viewV2.search(createViewResponse.id)

- expect(response.body.rows).toHaveLength(5)

- expect(response.body).toEqual({

+ expect(response.rows).toHaveLength(5)

+ expect(response).toEqual({

rows: expect.arrayContaining(

expectedRows.map(r => ({

_viewId: createViewResponse.id,

@@ -1328,8 +1330,8 @@ describe.each([

createViewResponse.id

)

- expect(response.body.rows).toHaveLength(4)

- expect(response.body.rows).toEqual(

+ expect(response.rows).toHaveLength(4)

+ expect(response.rows).toEqual(

expected.map(name => expect.objectContaining({ name }))

)

}

@@ -1357,8 +1359,8 @@ describe.each([

}

)

- expect(response.body.rows).toHaveLength(4)

- expect(response.body.rows).toEqual(

+ expect(response.rows).toHaveLength(4)

+ expect(response.rows).toEqual(

expected.map(name => expect.objectContaining({ name }))

)

}

@@ -1382,8 +1384,8 @@ describe.each([

})

const response = await config.api.viewV2.search(view.id)

- expect(response.body.rows).toHaveLength(10)

- expect(response.body.rows).toEqual(

+ expect(response.rows).toHaveLength(10)

+ expect(response.rows).toEqual(

expect.arrayContaining(

rows.map(r => ({

...(isInternal

@@ -1402,7 +1404,7 @@ describe.each([

const createViewResponse = await config.createView()

const response = await config.api.viewV2.search(createViewResponse.id)

- expect(response.body.rows).toHaveLength(0)

+ expect(response.rows).toHaveLength(0)

})

it("respects the limit parameter", async () => {

@@ -1417,7 +1419,7 @@ describe.each([

query: {},

})

- expect(response.body.rows).toHaveLength(limit)

+ expect(response.rows).toHaveLength(limit)

})

it("can handle pagination", async () => {

@@ -1426,7 +1428,7 @@ describe.each([

const createViewResponse = await config.createView()

const allRows = (await config.api.viewV2.search(createViewResponse.id))

- .body.rows

+ .rows

const firstPageResponse = await config.api.viewV2.search(

createViewResponse.id,

@@ -1436,7 +1438,7 @@ describe.each([

query: {},

}

)

- expect(firstPageResponse.body).toEqual({

+ expect(firstPageResponse).toEqual({

rows: expect.arrayContaining(allRows.slice(0, 4)),

totalRows: isInternal ? 10 : undefined,

hasNextPage: true,

@@ -1448,12 +1450,12 @@ describe.each([

{

paginate: true,

limit: 4,

- bookmark: firstPageResponse.body.bookmark,

+ bookmark: firstPageResponse.bookmark,

query: {},

}

)

- expect(secondPageResponse.body).toEqual({

+ expect(secondPageResponse).toEqual({

rows: expect.arrayContaining(allRows.slice(4, 8)),

totalRows: isInternal ? 10 : undefined,

hasNextPage: true,

@@ -1465,11 +1467,11 @@ describe.each([

{

paginate: true,

limit: 4,

- bookmark: secondPageResponse.body.bookmark,

+ bookmark: secondPageResponse.bookmark,

query: {},

}

)

- expect(lastPageResponse.body).toEqual({

+ expect(lastPageResponse).toEqual({

rows: expect.arrayContaining(allRows.slice(8)),

totalRows: isInternal ? 10 : undefined,

hasNextPage: false,

@@ -1489,7 +1491,7 @@ describe.each([

email: "joe@joe.com",

roles: {},

},

- { expectStatus: 400 }

+ { status: 400 }

)

expect(response.message).toBe("Cannot create new user entry.")

})

@@ -1516,58 +1518,52 @@ describe.each([

it("does not allow public users to fetch by default", async () => {

await config.publish()

- await config.api.viewV2.search(viewId, undefined, {

- expectStatus: 403,

- usePublicUser: true,

+ await config.api.viewV2.publicSearch(viewId, undefined, {

+ status: 403,

})

})

it("allow public users to fetch when permissions are explicit", async () => {

- await config.api.permission.set({

+ await config.api.permission.add({

roleId: roles.BUILTIN_ROLE_IDS.PUBLIC,

level: PermissionLevel.READ,

resourceId: viewId,

})

await config.publish()

- const response = await config.api.viewV2.search(viewId, undefined, {

- usePublicUser: true,

- })

+ const response = await config.api.viewV2.publicSearch(viewId)

- expect(response.body.rows).toHaveLength(10)

+ expect(response.rows).toHaveLength(10)

})

it("allow public users to fetch when permissions are inherited", async () => {

- await config.api.permission.set({

+ await config.api.permission.add({

roleId: roles.BUILTIN_ROLE_IDS.PUBLIC,

level: PermissionLevel.READ,

resourceId: tableId,

})

await config.publish()

- const response = await config.api.viewV2.search(viewId, undefined, {

- usePublicUser: true,

- })

+ const response = await config.api.viewV2.publicSearch(viewId)

- expect(response.body.rows).toHaveLength(10)

+ expect(response.rows).toHaveLength(10)

})

it("respects inherited permissions, not allowing not public views from public tables", async () => {

- await config.api.permission.set({

+ await config.api.permission.add({

roleId: roles.BUILTIN_ROLE_IDS.PUBLIC,

level: PermissionLevel.READ,

resourceId: tableId,

})

- await config.api.permission.set({

+ await config.api.permission.add({

roleId: roles.BUILTIN_ROLE_IDS.POWER,

level: PermissionLevel.READ,

resourceId: viewId,

})

await config.publish()

- await config.api.viewV2.search(viewId, undefined, {

- usePublicUser: true,

- expectStatus: 403,

+ await config.api.viewV2.publicSearch(viewId, undefined, {

+ status: 403,

})

})

})

@@ -1754,7 +1750,7 @@ describe.each([

}

const row = await config.api.row.save(tableId, rowData)

- const { body: retrieved } = await config.api.row.get(tableId, row._id!)

+ const retrieved = await config.api.row.get(tableId, row._id!)

expect(retrieved).toEqual({

name: rowData.name,

description: rowData.description,

@@ -1781,7 +1777,7 @@ describe.each([

}

const row = await config.api.row.save(tableId, rowData)

- const { body: retrieved } = await config.api.row.get(tableId, row._id!)

+ const retrieved = await config.api.row.get(tableId, row._id!)

expect(retrieved).toEqual({

name: rowData.name,

description: rowData.description,

diff --git a/packages/server/src/api/routes/tests/table.spec.ts b/packages/server/src/api/routes/tests/table.spec.ts

index ce119e56f0..29465145a9 100644

--- a/packages/server/src/api/routes/tests/table.spec.ts

+++ b/packages/server/src/api/routes/tests/table.spec.ts

@@ -663,8 +663,7 @@ describe("/tables", () => {

expect(migratedTable.schema["user column"]).toBeDefined()

expect(migratedTable.schema["user relationship"]).not.toBeDefined()

- const resp = await config.api.row.get(table._id!, testRow._id!)

- const migratedRow = resp.body as Row

+ const migratedRow = await config.api.row.get(table._id!, testRow._id!)

expect(migratedRow["user column"]).toBeDefined()

expect(migratedRow["user relationship"]).not.toBeDefined()

@@ -716,15 +715,13 @@ describe("/tables", () => {

expect(migratedTable.schema["user column"]).toBeDefined()

expect(migratedTable.schema["user relationship"]).not.toBeDefined()

- const row1Migrated = (await config.api.row.get(table._id!, row1._id!))

- .body as Row

+ const row1Migrated = await config.api.row.get(table._id!, row1._id!)

expect(row1Migrated["user relationship"]).not.toBeDefined()

expect(row1Migrated["user column"].map((r: Row) => r._id)).toEqual(

expect.arrayContaining([users[0]._id, users[1]._id])

)

- const row2Migrated = (await config.api.row.get(table._id!, row2._id!))

- .body as Row

+ const row2Migrated = await config.api.row.get(table._id!, row2._id!)

expect(row2Migrated["user relationship"]).not.toBeDefined()

expect(row2Migrated["user column"].map((r: Row) => r._id)).toEqual(

expect.arrayContaining([users[1]._id, users[2]._id])

@@ -773,15 +770,13 @@ describe("/tables", () => {

expect(migratedTable.schema["user column"]).toBeDefined()

expect(migratedTable.schema["user relationship"]).not.toBeDefined()

- const row1Migrated = (await config.api.row.get(table._id!, row1._id!))

- .body as Row

+ const row1Migrated = await config.api.row.get(table._id!, row1._id!)

expect(row1Migrated["user relationship"]).not.toBeDefined()

expect(row1Migrated["user column"].map((r: Row) => r._id)).toEqual(

expect.arrayContaining([users[0]._id, users[1]._id])

)

- const row2Migrated = (await config.api.row.get(table._id!, row2._id!))

- .body as Row

+ const row2Migrated = await config.api.row.get(table._id!, row2._id!)

expect(row2Migrated["user relationship"]).not.toBeDefined()

expect(row2Migrated["user column"].map((r: Row) => r._id)).toEqual([

users[2]._id,

@@ -831,7 +826,7 @@ describe("/tables", () => {

subtype: FieldSubtype.USERS,

},

},

- { expectStatus: 400 }

+ { status: 400 }

)

})

@@ -846,7 +841,7 @@ describe("/tables", () => {

subtype: FieldSubtype.USERS,

},

},

- { expectStatus: 400 }

+ { status: 400 }

)

})

@@ -861,7 +856,7 @@ describe("/tables", () => {

subtype: FieldSubtype.USERS,

},

},

- { expectStatus: 400 }

+ { status: 400 }

)

})

@@ -880,7 +875,7 @@ describe("/tables", () => {

subtype: FieldSubtype.USERS,

},

},

- { expectStatus: 400 }

+ { status: 400 }

)

})

})

diff --git a/packages/server/src/api/routes/tests/user.spec.ts b/packages/server/src/api/routes/tests/user.spec.ts

index 076ee064dc..ff8c0d54b3 100644

--- a/packages/server/src/api/routes/tests/user.spec.ts

+++ b/packages/server/src/api/routes/tests/user.spec.ts

@@ -90,7 +90,7 @@ describe("/users", () => {

})

await config.api.user.update(

{ ...user, roleId: roles.BUILTIN_ROLE_IDS.POWER },

- { expectStatus: 409 }

+ { status: 409 }

)

})

})

diff --git a/packages/server/src/api/routes/tests/viewV2.spec.ts b/packages/server/src/api/routes/tests/viewV2.spec.ts

index b03a73ddda..5198e63338 100644

--- a/packages/server/src/api/routes/tests/viewV2.spec.ts

+++ b/packages/server/src/api/routes/tests/viewV2.spec.ts

@@ -177,7 +177,7 @@ describe.each([

}

await config.api.viewV2.create(newView, {

- expectStatus: 201,

+ status: 201,

})

})

})

@@ -275,7 +275,7 @@ describe.each([

const tableId = table._id!

await config.api.viewV2.update(

{ ...view, id: generator.guid() },

- { expectStatus: 404 }

+ { status: 404 }

)

expect(await config.api.table.get(tableId)).toEqual(

@@ -304,7 +304,7 @@ describe.each([

},

],

},

- { expectStatus: 404 }

+ { status: 404 }

)

expect(await config.api.table.get(tableId)).toEqual(

@@ -326,12 +326,10 @@ describe.each([

...viewV1,

},

{

- expectStatus: 400,

- handleResponse: r => {

- expect(r.body).toEqual({

- message: "Only views V2 can be updated",

- status: 400,

- })

+ status: 400,

+ body: {

+ message: "Only views V2 can be updated",

+ status: 400,

},

}

)

@@ -403,7 +401,7 @@ describe.each([

} as Record,

},

{

- expectStatus: 200,

+ status: 200,

}

)

})

diff --git a/packages/server/src/appMigrations/tests/migrations.spec.ts b/packages/server/src/appMigrations/tests/migrations.spec.ts

index 5eb8535695..7af2346934 100644

--- a/packages/server/src/appMigrations/tests/migrations.spec.ts

+++ b/packages/server/src/appMigrations/tests/migrations.spec.ts

@@ -30,9 +30,9 @@ describe("migrations", () => {

const appId = config.getAppId()

- const response = await config.api.application.getRaw(appId)

-

- expect(response.headers[Header.MIGRATING_APP]).toBeUndefined()

+ await config.api.application.get(appId, {

+ headersNotPresent: [Header.MIGRATING_APP],

+ })

})

it("accessing an app that has pending migrations will attach the migrating header", async () => {

@@ -46,8 +46,10 @@ describe("migrations", () => {

func: async () => {},

})

- const response = await config.api.application.getRaw(appId)

-

- expect(response.headers[Header.MIGRATING_APP]).toEqual(appId)

+ await config.api.application.get(appId, {

+ headers: {

+ [Header.MIGRATING_APP]: appId,

+ },

+ })

})

})
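Note on the pattern in the hunks above: the tests stop reading `res.body` / `res.headers` after the call and instead pass an expectations object (`status`, `body`, `headers`, `headersNotPresent`) into the API helper. The sketch below shows one way such an object could be checked. It is an illustration only: `Expectations`, `FakeResponse` and `checkExpectations` are hypothetical names, the default status of 200 and the shallow subset match on `body` are assumptions, and none of this is taken from the actual test helper in this repository.

```typescript
// Hypothetical shapes for illustration; not the repository's real helper types.
interface Expectations {
  status?: number
  body?: Record<string, unknown>
  headers?: Record<string, string>
  headersNotPresent?: string[]
}

interface FakeResponse {
  status: number
  body: Record<string, unknown>
  headers: Record<string, string>
}

// Returns a list of mismatch descriptions; an empty list means every expectation held.
function checkExpectations(
  res: FakeResponse,
  exp: Expectations = {}
): string[] {
  const failures: string[] = []

  // Assumption: a call that does not name a status expects 200.
  const status = exp.status ?? 200
  if (res.status !== status) {
    failures.push(`expected status ${status}, got ${res.status}`)
  }

  // Assumption: body expectations are a shallow subset match, so extra
  // fields on the response body are ignored.
  const expectedBody = exp.body ?? {}
  for (const key of Object.keys(expectedBody)) {
    if (JSON.stringify(res.body[key]) !== JSON.stringify(expectedBody[key])) {
      failures.push(`body.${key} did not match`)
    }
  }

  // Headers listed in `headers` must be present with the given value.
  const expectedHeaders = exp.headers ?? {}
  for (const name of Object.keys(expectedHeaders)) {
    if (res.headers[name] !== expectedHeaders[name]) {
      failures.push(`header ${name} did not match`)
    }
  }

  // Headers listed in `headersNotPresent` must be absent altogether.
  for (const name of exp.headersNotPresent ?? []) {
    if (name in res.headers) {
      failures.push(`header ${name} should be absent`)
    }
  }

  return failures
}
```

Under these assumptions, `checkExpectations(res, { status: 403, body: { message: "..." } })` covers what the removed `expectStatus` option plus a follow-up `expect(response.body.message)` assertion did in two steps.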

diff --git a/packages/server/src/automations/tests/createRow.spec.ts b/packages/server/src/automations/tests/createRow.spec.ts

index 0615fcdd97..0098be39a5 100644

--- a/packages/server/src/automations/tests/createRow.spec.ts

+++ b/packages/server/src/automations/tests/createRow.spec.ts

@@ -24,7 +24,7 @@ describe("test the create row action", () => {

expect(res.id).toBeDefined()

expect(res.revision).toBeDefined()

expect(res.success).toEqual(true)

- const gottenRow = await config.getRow(table._id, res.id)

+ const gottenRow = await config.api.row.get(table._id, res.id)

expect(gottenRow.name).toEqual("test")

expect(gottenRow.description).toEqual("test")

})

diff --git a/packages/server/src/automations/tests/updateRow.spec.ts b/packages/server/src/automations/tests/updateRow.spec.ts

index b64c52147d..76823e8a11 100644

--- a/packages/server/src/automations/tests/updateRow.spec.ts

+++ b/packages/server/src/automations/tests/updateRow.spec.ts

@@ -36,7 +36,7 @@ describe("test the update row action", () => {

it("should be able to run the action", async () => {

const res = await setup.runStep(setup.actions.UPDATE_ROW.stepId, inputs)

expect(res.success).toEqual(true)

- const updatedRow = await config.getRow(table._id!, res.id)

+ const updatedRow = await config.api.row.get(table._id!, res.id)

expect(updatedRow.name).toEqual("Updated name")

expect(updatedRow.description).not.toEqual("")

})

@@ -87,8 +87,8 @@ describe("test the update row action", () => {

})

let getResp = await config.api.row.get(table._id!, row._id!)

- expect(getResp.body.user1[0]._id).toEqual(user1._id)

- expect(getResp.body.user2[0]._id).toEqual(user2._id)

+ expect(getResp.user1[0]._id).toEqual(user1._id)

+ expect(getResp.user2[0]._id).toEqual(user2._id)

let stepResp = await setup.runStep(setup.actions.UPDATE_ROW.stepId, {

rowId: row._id,

@@ -103,8 +103,8 @@ describe("test the update row action", () => {

expect(stepResp.success).toEqual(true)

getResp = await config.api.row.get(table._id!, row._id!)

- expect(getResp.body.user1[0]._id).toEqual(user2._id)

- expect(getResp.body.user2[0]._id).toEqual(user2._id)

+ expect(getResp.user1[0]._id).toEqual(user2._id)

+ expect(getResp.user2[0]._id).toEqual(user2._id)

})

it("should overwrite links if those links are not set and we ask it do", async () => {

@@ -140,8 +140,8 @@ describe("test the update row action", () => {

})

let getResp = await config.api.row.get(table._id!, row._id!)

- expect(getResp.body.user1[0]._id).toEqual(user1._id)

- expect(getResp.body.user2[0]._id).toEqual(user2._id)

+ expect(getResp.user1[0]._id).toEqual(user1._id)

+ expect(getResp.user2[0]._id).toEqual(user2._id)

let stepResp = await setup.runStep(setup.actions.UPDATE_ROW.stepId, {

rowId: row._id,

@@ -163,7 +163,7 @@ describe("test the update row action", () => {

expect(stepResp.success).toEqual(true)

getResp = await config.api.row.get(table._id!, row._id!)

- expect(getResp.body.user1[0]._id).toEqual(user2._id)

- expect(getResp.body.user2).toBeUndefined()

+ expect(getResp.user1[0]._id).toEqual(user2._id)

+ expect(getResp.user2).toBeUndefined()

})

})

diff --git a/packages/server/src/db/tests/linkController.spec.ts b/packages/server/src/db/tests/linkController.spec.ts

index ae1922db27..4f41fd3838 100644

--- a/packages/server/src/db/tests/linkController.spec.ts

+++ b/packages/server/src/db/tests/linkController.spec.ts

@@ -100,7 +100,7 @@ describe("test the link controller", () => {

const { _id } = await config.createRow(

basicLinkedRow(t1._id!, row._id!, linkField)

)

- return config.getRow(t1._id!, _id!)

+ return config.api.row.get(t1._id!, _id!)

}

it("should be able to confirm if two table schemas are equal", async () => {

diff --git a/packages/server/src/environment.ts b/packages/server/src/environment.ts

index d0b7e91401..a7c6df29ea 100644

--- a/packages/server/src/environment.ts

+++ b/packages/server/src/environment.ts

@@ -76,13 +76,16 @@ const environment = {

DEFAULTS.AUTOMATION_THREAD_TIMEOUT > QUERY_THREAD_TIMEOUT

? DEFAULTS.AUTOMATION_THREAD_TIMEOUT

: QUERY_THREAD_TIMEOUT,

- SQL_MAX_ROWS: process.env.SQL_MAX_ROWS,

BB_ADMIN_USER_EMAIL: process.env.BB_ADMIN_USER_EMAIL,

BB_ADMIN_USER_PASSWORD: process.env.BB_ADMIN_USER_PASSWORD,

PLUGINS_DIR: process.env.PLUGINS_DIR || DEFAULTS.PLUGINS_DIR,

OPENAI_API_KEY: process.env.OPENAI_API_KEY,

MAX_IMPORT_SIZE_MB: process.env.MAX_IMPORT_SIZE_MB,

SESSION_EXPIRY_SECONDS: process.env.SESSION_EXPIRY_SECONDS,

+ // SQL

+ SQL_MAX_ROWS: process.env.SQL_MAX_ROWS,

+ SQL_LOGGING_ENABLE: process.env.SQL_LOGGING_ENABLE,

+ SQL_ALIASING_DISABLE: process.env.SQL_ALIASING_DISABLE,

// flags

ALLOW_DEV_AUTOMATIONS: process.env.ALLOW_DEV_AUTOMATIONS,

DISABLE_THREADING: process.env.DISABLE_THREADING,

diff --git a/packages/server/src/integration-test/postgres.spec.ts b/packages/server/src/integration-test/postgres.spec.ts

index 0031fe1136..7c14bc2b69 100644

--- a/packages/server/src/integration-test/postgres.spec.ts

+++ b/packages/server/src/integration-test/postgres.spec.ts

@@ -398,7 +398,7 @@ describe("postgres integrations", () => {

expect(res.status).toBe(200)

expect(res.body).toEqual(updatedRow)

- const persistedRow = await config.getRow(

+ const persistedRow = await config.api.row.get(

primaryPostgresTable._id!,

row.id

)

@@ -1040,28 +1040,37 @@ describe("postgres integrations", () => {

describe("POST /api/datasources/verify", () => {

it("should be able to verify the connection", async () => {

- const response = await config.api.datasource.verify({

- datasource: await databaseTestProviders.postgres.datasource(),

- })

- expect(response.status).toBe(200)

- expect(response.body.connected).toBe(true)

+ await config.api.datasource.verify(

+ {

+ datasource: await databaseTestProviders.postgres.datasource(),

+ },

+ {

+ body: {

+ connected: true,

+ },

+ }

+ )

})

it("should state an invalid datasource cannot connect", async () => {

const dbConfig = await databaseTestProviders.postgres.datasource()

- const response = await config.api.datasource.verify({

- datasource: {

- ...dbConfig,

- config: {

- ...dbConfig.config,

- password: "wrongpassword",

+ await config.api.datasource.verify(

+ {

+ datasource: {

+ ...dbConfig,

+ config: {

+ ...dbConfig.config,

+ password: "wrongpassword",

+ },

},

},

- })

-

- expect(response.status).toBe(200)

- expect(response.body.connected).toBe(false)

- expect(response.body.error).toBeDefined()

+ {

+ body: {

+ connected: false,

+ error: 'password authentication failed for user "postgres"',

+ },

+ }

+ )

})

})

diff --git a/packages/server/src/integrations/base/query.ts b/packages/server/src/integrations/base/query.ts

index 4f31e37744..b906ecbb1b 100644

--- a/packages/server/src/integrations/base/query.ts

+++ b/packages/server/src/integrations/base/query.ts

@@ -1,11 +1,15 @@

-import { QueryJson, Datasource } from "@budibase/types"

+import {

+ QueryJson,

+ Datasource,

+ DatasourcePlusQueryResponse,

+} from "@budibase/types"

import { getIntegration } from "../index"

import sdk from "../../sdk"

export async function makeExternalQuery(

datasource: Datasource,

json: QueryJson

-) {

+): DatasourcePlusQueryResponse {

datasource = await sdk.datasources.enrich(datasource)

const Integration = await getIntegration(datasource.source)

// query is the opinionated function

diff --git a/packages/server/src/integrations/base/sql.ts b/packages/server/src/integrations/base/sql.ts

index e52e9dd2ae..6605052598 100644

--- a/packages/server/src/integrations/base/sql.ts

+++ b/packages/server/src/integrations/base/sql.ts

@@ -17,7 +17,6 @@ const envLimit = environment.SQL_MAX_ROWS

: null

const BASE_LIMIT = envLimit || 5000

-type KnexQuery = Knex.QueryBuilder | Knex

// these are invalid dates sent by the client, need to convert them to a real max date

const MIN_ISO_DATE = "0000-00-00T00:00:00.000Z"

const MAX_ISO_DATE = "9999-00-00T00:00:00.000Z"

@@ -127,10 +126,15 @@ class InternalBuilder {

// right now we only do filters on the specific table being queried

addFilters(

- query: KnexQuery,

+ query: Knex.QueryBuilder,

filters: SearchFilters | undefined,

- opts: { relationship?: boolean; tableName?: string }

- ): KnexQuery {

+ tableName: string,

+ opts: { aliases?: Record<string, string>; relationship?: boolean }

+ ): Knex.QueryBuilder {

+ function getTableName(name: string) {

+ const alias = opts.aliases?.[name]

+ return alias || name

+ }

function iterate(

structure: { [key: string]: any },

fn: (key: string, value: any) => void

@@ -139,10 +143,11 @@ class InternalBuilder {

const updatedKey = dbCore.removeKeyNumbering(key)

const isRelationshipField = updatedKey.includes(".")

if (!opts.relationship && !isRelationshipField) {

- fn(`${opts.tableName}.${updatedKey}`, value)

+ fn(`${getTableName(tableName)}.${updatedKey}`, value)

}

if (opts.relationship && isRelationshipField) {

- fn(updatedKey, value)

+ const [filterTableName, property] = updatedKey.split(".")

+ fn(`${getTableName(filterTableName)}.${property}`, value)

}

}

}